Diffusion Models

Part of CS180: Intro to Computer Vision and Computational Photography

Diffusion Model Outputs

Using DeepFloyd, we first test how image generation with the diffusion model works, how the two stages of the model work together to produce an image, and how the number of inference steps affects the quality of the generated images.

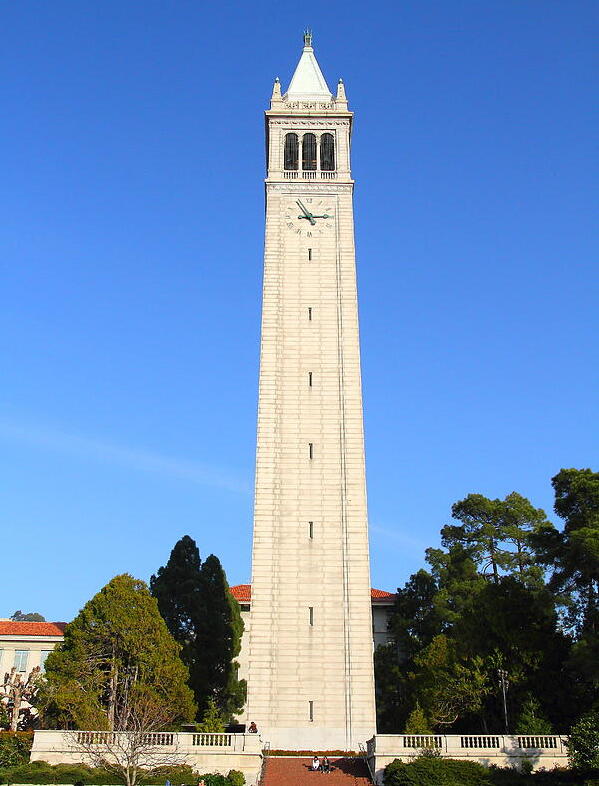

In our further testing, we will be using Berkeley’s Sather Tower (Campanile) as our test image.

Image Generation

When the number of stage 1 steps is held fixed, the number of stage 2 steps changes the details of the image: the more steps in stage 2, the more detailed the image becomes. When more steps are added to stage 1, however, the model denoises to a different image altogether, so two stage 1 results may no longer look alike.

Sampling Loops

First, let us implement the forward process of diffusion, which adds noise to a clean image.
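As a minimal sketch of the forward process, the snippet below samples \(x_{t} = \sqrt{\bar\alpha_{t}}\,x_{0} + \sqrt{1-\bar\alpha_{t}}\,\epsilon\) with NumPy. The linear beta schedule, the helper names `make_alphas_cumprod` and `forward`, and the random stand-in image are assumptions for illustration; DeepFloyd ships its own schedule, which should be used in practice.

```python
import numpy as np

def make_alphas_cumprod(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an ASSUMED linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def forward(x0, t, alphas_cumprod, rng):
    """Sample x_t from q(x_t | x_0) and return it with the noise that was used."""
    abar = alphas_cumprod[t]
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * eps
    return x_t, eps

rng = np.random.default_rng(0)
alphas_cumprod = make_alphas_cumprod()
x0 = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # stand-in for the test image
x_250, eps_250 = forward(x0, 250, alphas_cumprod, rng)
x_750, eps_750 = forward(x0, 750, alphas_cumprod, rng)
```

Larger `t` means a smaller \(\bar\alpha_{t}\), so less of the original image survives and more of the sample is pure noise.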

Noise Addition

Classical Denoising

The classical way to denoise a noisy image is to apply a Gaussian blur. Let us try that on our noised images.
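For reference, a Gaussian blur can be sketched in plain NumPy as a separable filter. The function names, the three-sigma kernel radius, and the reflect padding are choices made for this illustration, not necessarily what was used to produce the figures.

```python
import numpy as np

def gaussian_kernel1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at about three sigma."""
    radius = int(3 * sigma + 0.5)
    xs = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (xs / sigma) ** 2)
    return kernel / kernel.sum()

def gaussian_blur(img, sigma):
    """Blur a single-channel (H, W) image with a separable Gaussian filter."""
    kernel = gaussian_kernel1d(sigma)
    radius = len(kernel) // 2
    padded = np.pad(img, radius, mode="reflect")
    # Filter along rows first, then along columns; "valid" undoes the padding.
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)

rng = np.random.default_rng(0)
noise = rng.standard_normal((32, 32))
blurred = gaussian_blur(noise, sigma=2.0)
```

Blurring averages the noise away, but it removes image detail at the same rate, which is why it struggles at high noise levels.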

Gaussian Blur

One-Step Denoising

Now we can use our UNet to predict the noise in the image, and then estimate \(x_{0}\), the original image, from the noised image \(x_{t}\) and our predicted noise \(\epsilon\).
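The estimate follows from solving the forward equation \(x_{t} = \sqrt{\bar\alpha_{t}}\,x_{0} + \sqrt{1-\bar\alpha_{t}}\,\epsilon\) for \(x_{0}\). A minimal sketch, in which `estimate_x0` is a hypothetical helper name and the true noise stands in for the UNet's prediction:

```python
import numpy as np

def estimate_x0(x_t, eps_hat, abar_t):
    """Invert x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps for x0."""
    return (x_t - np.sqrt(1.0 - abar_t) * eps_hat) / np.sqrt(abar_t)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 8, 8))   # stand-in clean image
eps = rng.standard_normal(x0.shape)
abar_t = 0.3                                   # illustrative noise level
x_t = np.sqrt(abar_t) * x0 + np.sqrt(1.0 - abar_t) * eps

# With a perfect noise prediction the clean image is recovered exactly.
x0_hat = estimate_x0(x_t, eps, abar_t)
```

Any error in the predicted noise is scaled by \(\sqrt{1-\bar\alpha_{t}}/\sqrt{\bar\alpha_{t}}\), which grows quickly as the noise level rises.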

One-Step Denoising

The UNet is able to denoise the image, and at low noise levels the denoised image is very similar to the original. As the noise increases, however, the denoised image becomes less representative of the original image.

Iterative Denoising

Instead of denoising the image in a single step, we can denoise it gradually over multiple steps. By doing so, the denoised image becomes more representative of the original image.
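One common way to write a single update is the DDPM posterior mean adapted to strided timesteps. The sketch below assumes that form, a linear beta schedule, and a stride of 30, and it omits the added-variance term for simplicity; the `oracle` function is a stand-in for the UNet that cheats by knowing the clean image, used here only so the loop can be checked.

```python
import numpy as np

def denoise_step(x_t, eps_hat, abar_t, abar_prev):
    """Move from timestep t to the less noisy timestep t' (posterior mean only)."""
    alpha = abar_t / abar_prev
    beta = 1.0 - alpha
    x0_hat = (x_t - np.sqrt(1.0 - abar_t) * eps_hat) / np.sqrt(abar_t)
    coef_x0 = np.sqrt(abar_prev) * beta / (1.0 - abar_t)
    coef_xt = np.sqrt(alpha) * (1.0 - abar_prev) / (1.0 - abar_t)
    return coef_x0 * x0_hat + coef_xt * x_t

def iterative_denoise(x_t, timesteps, alphas_cumprod, predict_noise):
    """Apply denoise_step along a decreasing list of timesteps."""
    x = x_t
    for t, t_prev in zip(timesteps[:-1], timesteps[1:]):
        eps_hat = predict_noise(x, t)
        x = denoise_step(x, eps_hat, alphas_cumprod[t], alphas_cumprod[t_prev])
    return x

rng = np.random.default_rng(0)
alphas_cumprod = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))  # ASSUMED schedule
timesteps = list(range(990, -1, -30))                             # ASSUMED stride
x0 = rng.uniform(-1.0, 1.0, size=(3, 8, 8))
abar_start = alphas_cumprod[timesteps[0]]
x_start = np.sqrt(abar_start) * x0 + np.sqrt(1.0 - abar_start) * rng.standard_normal(x0.shape)

def oracle(x, t):
    """Stand-in for the UNet: returns the exact noise because it knows x0."""
    abar = alphas_cumprod[t]
    return (x - np.sqrt(abar) * x0) / np.sqrt(1.0 - abar)

restored = iterative_denoise(x_start, timesteps, alphas_cumprod, oracle)
```

Each step blends the current clean-image estimate with the current noisy image, so early mistakes can be corrected by later predictions.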

Iterative Denoising

Diffusion Model Sampling

Now we can pass in pure noise, start at the maximum noise level, and denoise iteratively all the way down to an “original image”. We will use the prompt embedding “a high quality photo”.

Diffusion Model Sampling

Classifier Free Guidance

To improve our images, we can compute both a conditional and an unconditional noise estimate at each timestep, and use the difference between the two to guide our denoising process.
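In its usual formulation, classifier-free guidance extrapolates from the unconditional estimate towards the conditional one: \(\epsilon = \epsilon_{u} + \gamma(\epsilon_{c} - \epsilon_{u})\). A minimal sketch, where the function name and the default scale are illustrative assumptions and random arrays stand in for the two UNet outputs:

```python
import numpy as np

def cfg_noise(eps_cond, eps_uncond, scale=7.0):
    """Guided noise estimate; scale > 1 pushes past the conditional estimate."""
    return eps_uncond + scale * (eps_cond - eps_uncond)

rng = np.random.default_rng(0)
eps_cond = rng.standard_normal((3, 8, 8))     # stand-in for the prompted estimate
eps_uncond = rng.standard_normal((3, 8, 8))   # stand-in for the empty-prompt estimate
eps_guided = cfg_noise(eps_cond, eps_uncond)
```

A scale of 0 gives the unconditional estimate and a scale of 1 gives the conditional one; larger values trade diversity for adherence to the prompt.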

Classifier Free Guidance

Image to Image Translation

Now we can take an image, add noise to it, and then denoise it to turn it into a different image.

Image to Image Translation

Hand Drawn and Web Images

Let us do the same for hand-drawn images and images from the web.

Hand Drawn and Web Images

Inpainting

If we add noise to only part of an image and let the model denoise that part, it inpaints the region. We get the following results.

Inpainting
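One way to hold the rest of the image fixed is to overwrite everything outside the mask, after every denoising step, with a freshly noised copy of the original at the current noise level. The sketch below assumes that approach and the convention that the mask is 1 where new content is generated; `keep_known_region` is a hypothetical helper name.

```python
import numpy as np

def keep_known_region(x_t, x_orig, mask, abar_t, rng):
    """Keep x_t inside the mask; elsewhere use the original noised to level t."""
    eps = rng.standard_normal(x_orig.shape)
    noised_orig = np.sqrt(abar_t) * x_orig + np.sqrt(1.0 - abar_t) * eps
    return mask * x_t + (1.0 - mask) * noised_orig

rng = np.random.default_rng(0)
x_orig = rng.uniform(-1.0, 1.0, size=(3, 8, 8))  # stand-in for the source image
x_t = rng.standard_normal((3, 8, 8))             # stand-in for the current sample
mask = np.zeros((1, 8, 8))
mask[:, 2:6, 2:6] = 1.0                          # region to inpaint
x_t = keep_known_region(x_t, x_orig, mask, abar_t=0.5, rng=rng)
```

Because the unmasked pixels always match the original at the right noise level, the model only has freedom to invent content inside the mask.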

Text Conditioned Image to Image Translation

Now let us condition the denoising on text by supplying prompt embeddings for the image-to-image process.

Text Conditioned Image to Image Translation

Visual Anagrams

By using the prompt embeddings and some flipping, we can generate visual anagrams by combining our noise estimates!
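A sketch of how the noise estimates might be combined: one estimate is taken on the upright image with the first prompt, the other on the vertically flipped image with the second prompt, and the second is flipped back before averaging. The names `anagram_noise` and `toy_predict_noise` are hypothetical, and the toy predictor is only a placeholder for the UNet.

```python
import numpy as np

def anagram_noise(x_t, t, predict_noise, prompt_a, prompt_b):
    """Average an upright estimate with a flipped-back estimate of the flipped image."""
    eps_a = predict_noise(x_t, t, prompt_a)
    flipped = np.flip(x_t, axis=-2)                           # flip top to bottom
    eps_b = np.flip(predict_noise(flipped, t, prompt_b), axis=-2)
    return 0.5 * (eps_a + eps_b)

def toy_predict_noise(x, t, prompt_scale):
    """Placeholder for the UNet; a real model would take a prompt embedding."""
    return prompt_scale * x

rng = np.random.default_rng(0)
x_t = rng.standard_normal((3, 8, 8))
eps = anagram_noise(x_t, 500, toy_predict_noise, 2.0, 4.0)
```

Flipping the second estimate back keeps both estimates in the same orientation, so the result reads as the first prompt upright and as the second prompt upside down.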

Visual Anagrams

Hybrid Images

Finally, we can create hybrid images by combining the low-frequency components of one image with the high-frequency components of another image.
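In a diffusion setting this is typically done on the noise estimates for two prompts rather than on finished images; the sketch below assumes that variant. The FFT-based Gaussian low-pass filter (with circular boundaries), the value of sigma, and the function names are assumptions for illustration.

```python
import numpy as np

def gaussian_lowpass(x, sigma):
    """Low-pass the last two axes by multiplying the spectrum with a Gaussian."""
    h, w = x.shape[-2:]
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    # Frequency response of a spatial Gaussian with standard deviation sigma pixels.
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(x) * transfer))

def hybrid_noise(eps_low, eps_high, sigma=2.0):
    """Low frequencies from eps_low plus high frequencies from eps_high."""
    return gaussian_lowpass(eps_low, sigma) + (eps_high - gaussian_lowpass(eps_high, sigma))

rng = np.random.default_rng(0)
eps_far = rng.standard_normal((3, 16, 16))    # stand-in estimate for the far-view prompt
eps_near = rng.standard_normal((3, 16, 16))   # stand-in estimate for the near-view prompt
eps = hybrid_noise(eps_far, eps_near)
```

The low-frequency prompt dominates when the image is viewed from far away, and the high-frequency prompt takes over up close.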

Hybrid Images

Bells and Whistles

I wanted to design a course logo, so I generated prompt embeddings for a photo of an eye and a photo of a CPU, and generated a hybrid image of the two. The result is shown below.

Bells and Whistles

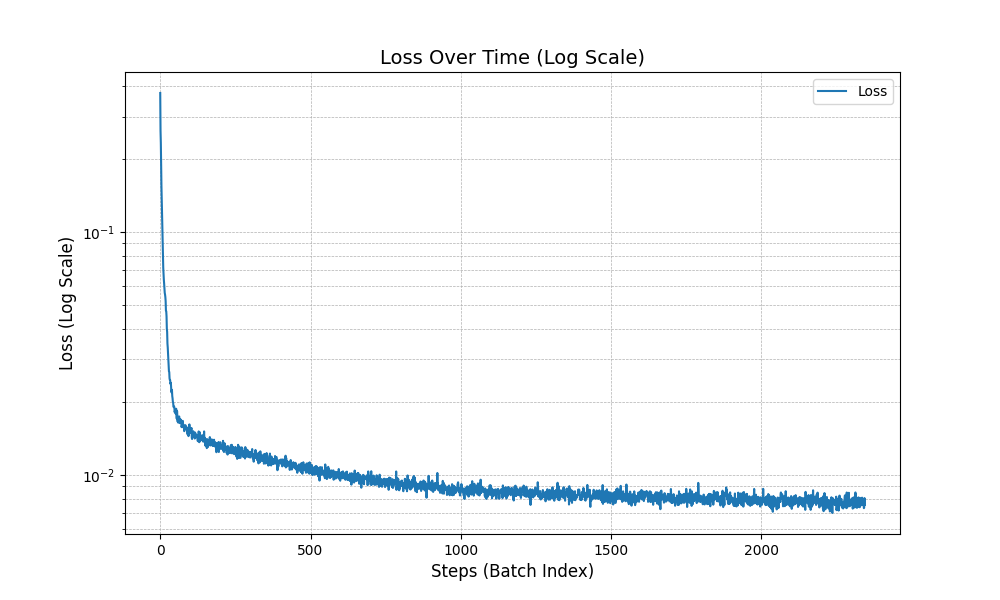

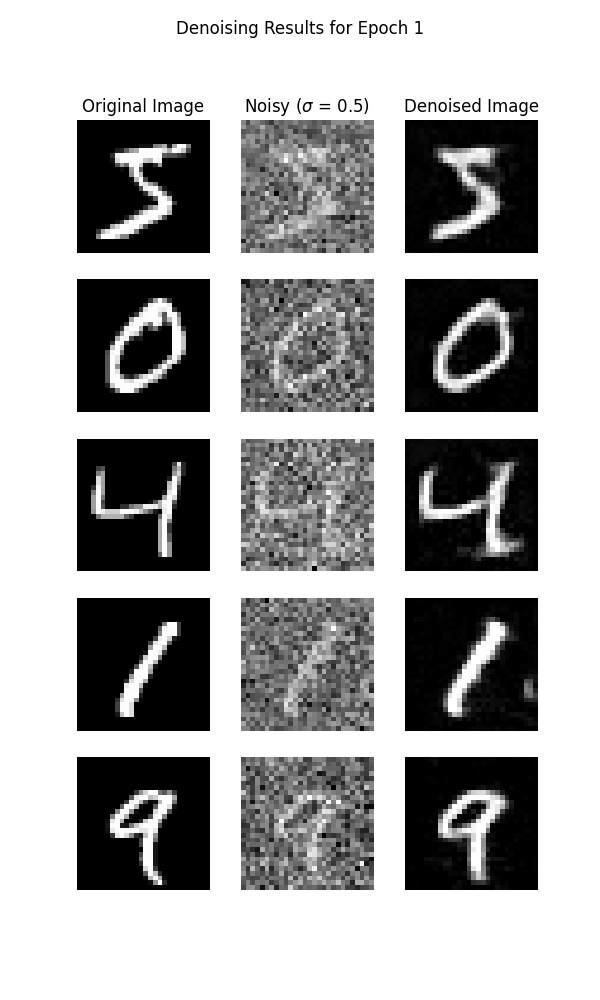

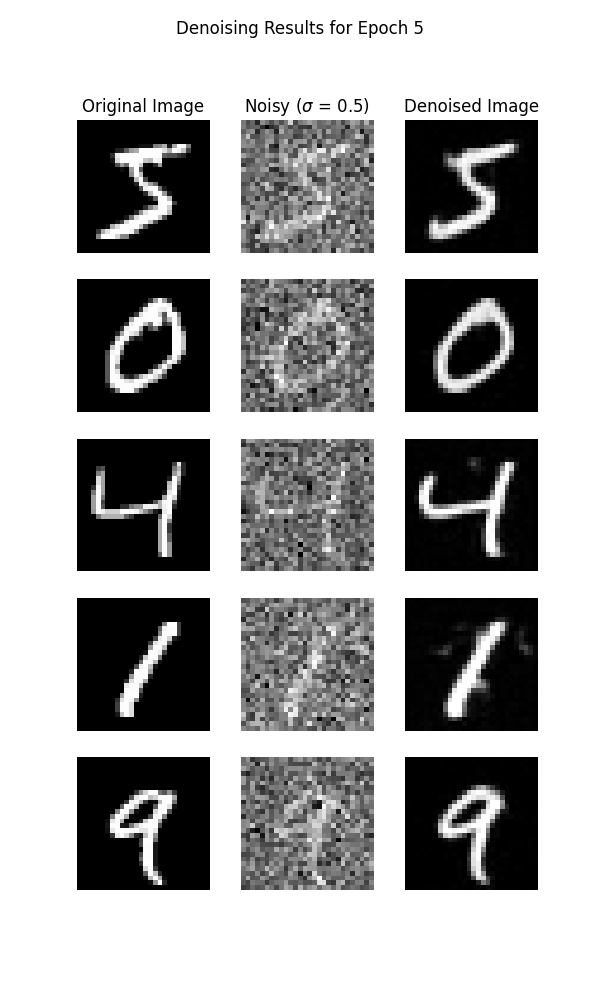

Training a Single Step Denoising Model

Now we can train a single-step denoising model. We will use the MNIST dataset for this task.
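The training pairs for such a denoiser can be sketched as follows: a clean image \(x\) is corrupted to \(z = x + \sigma\epsilon\), and the model is trained to map \(z\) back to \(x\) under an L2 loss. The noise level, the helper names, and the random stand-in batch are assumptions; the network itself is omitted, and the loop only shows how an untouched noisy input scores at different noise levels.

```python
import numpy as np

def make_noisy_batch(x, sigma, rng):
    """Corrupt clean images: z = x + sigma * eps with eps ~ N(0, I)."""
    return x + sigma * rng.standard_normal(x.shape)

def l2_loss(pred, target):
    """Mean squared error between a prediction and the clean target."""
    return np.mean((pred - target) ** 2)

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(16, 1, 28, 28))  # stand-in for an MNIST batch
sigma = 0.5                                       # HYPOTHETICAL training noise level
z = make_noisy_batch(x, sigma, rng)

# Loss of a "do nothing" denoiser that returns its input, per noise level.
baseline = {s: l2_loss(make_noisy_batch(x, s, rng), x) for s in (0.2, 0.5, 1.0)}
```

A trained denoiser should score well below this baseline at the noise level it saw during training; other levels are out of distribution for it.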

Training a Single Step Denoising Model

The results are pretty good. But what happens when we give the model images with noise levels that it was not trained on?

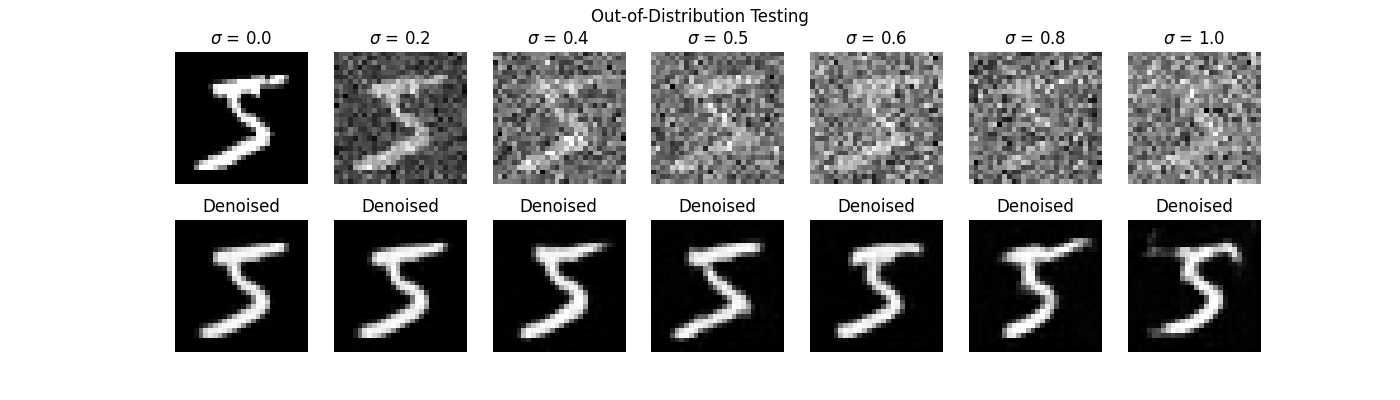

Out of Distribution Testing

That was not as good. Let us see if we can improve the model.

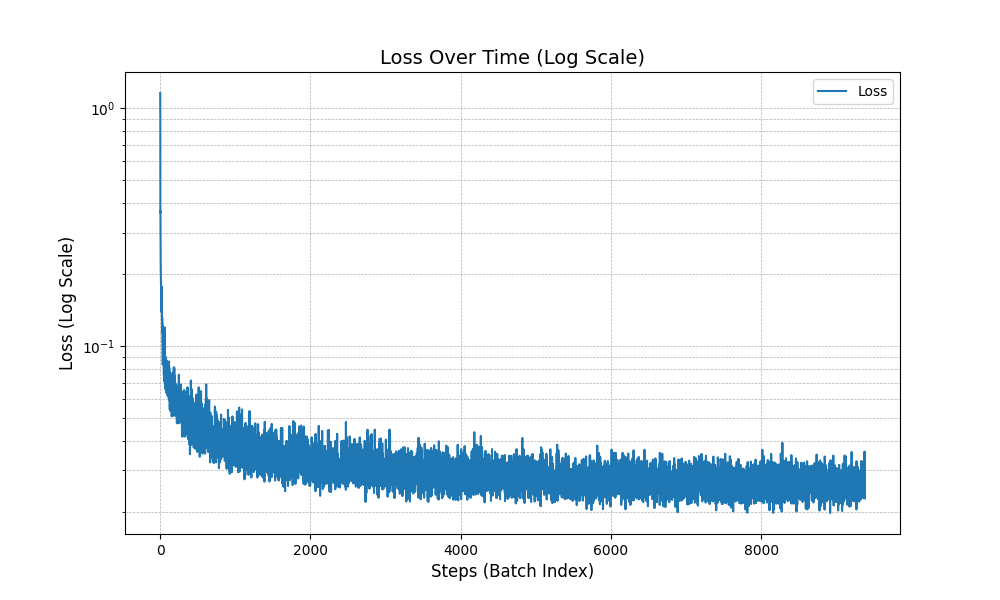

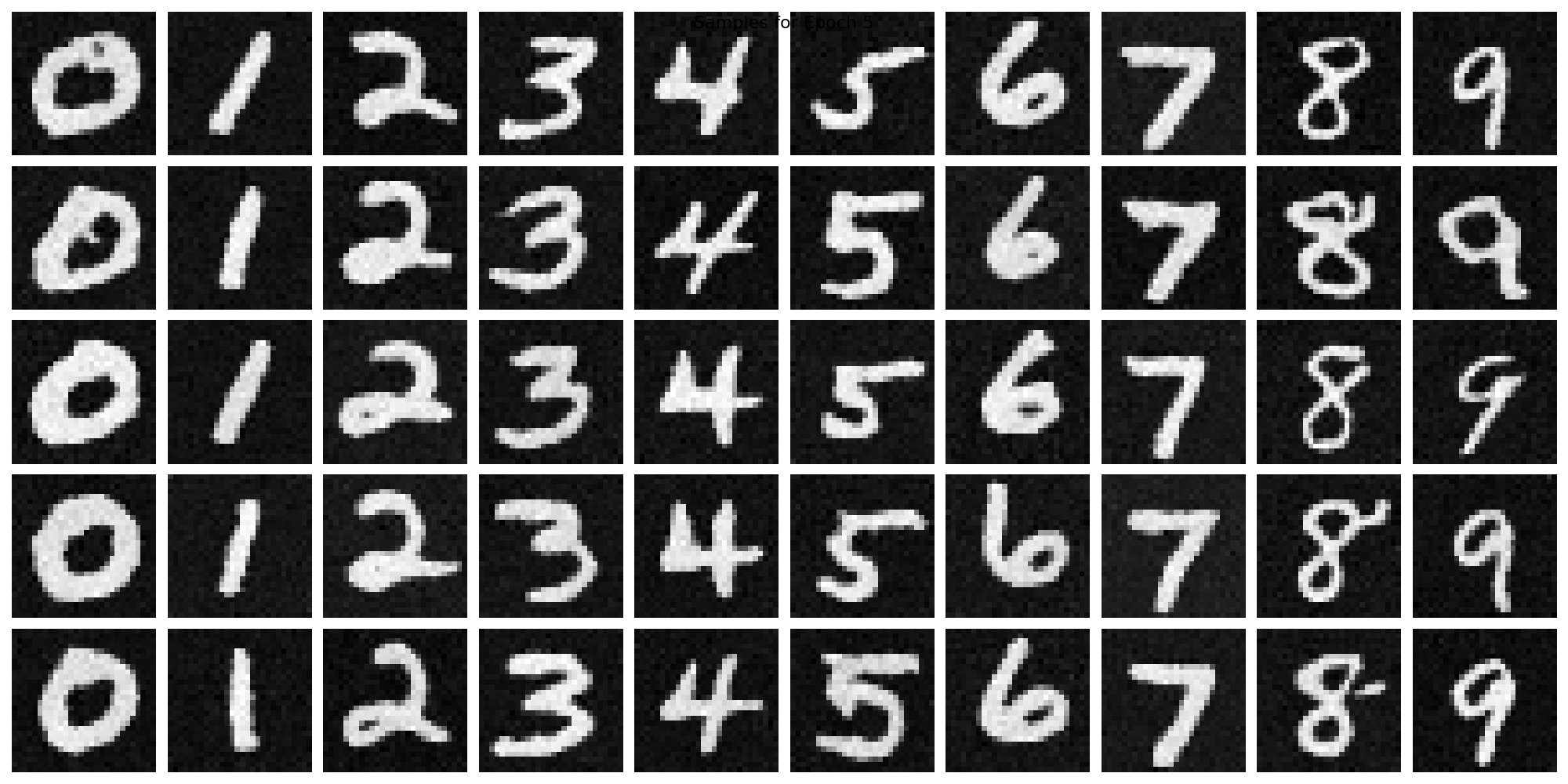

Training a Diffusion Model

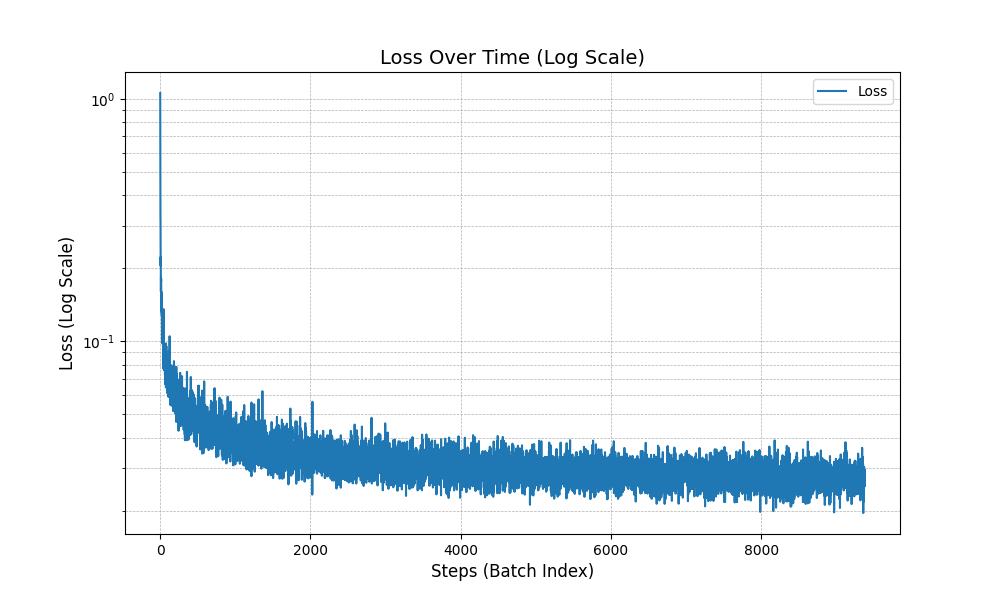

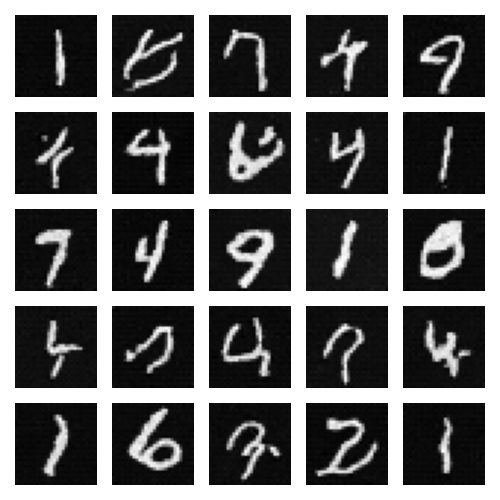

Now we will train a UNet to iteratively denoise the image. We will again use the MNIST dataset for this task.

Training a Diffusion Model

The results are much better than those of the single-step model, but we cannot control which digit class the model generates. Let us see if we can improve the model.

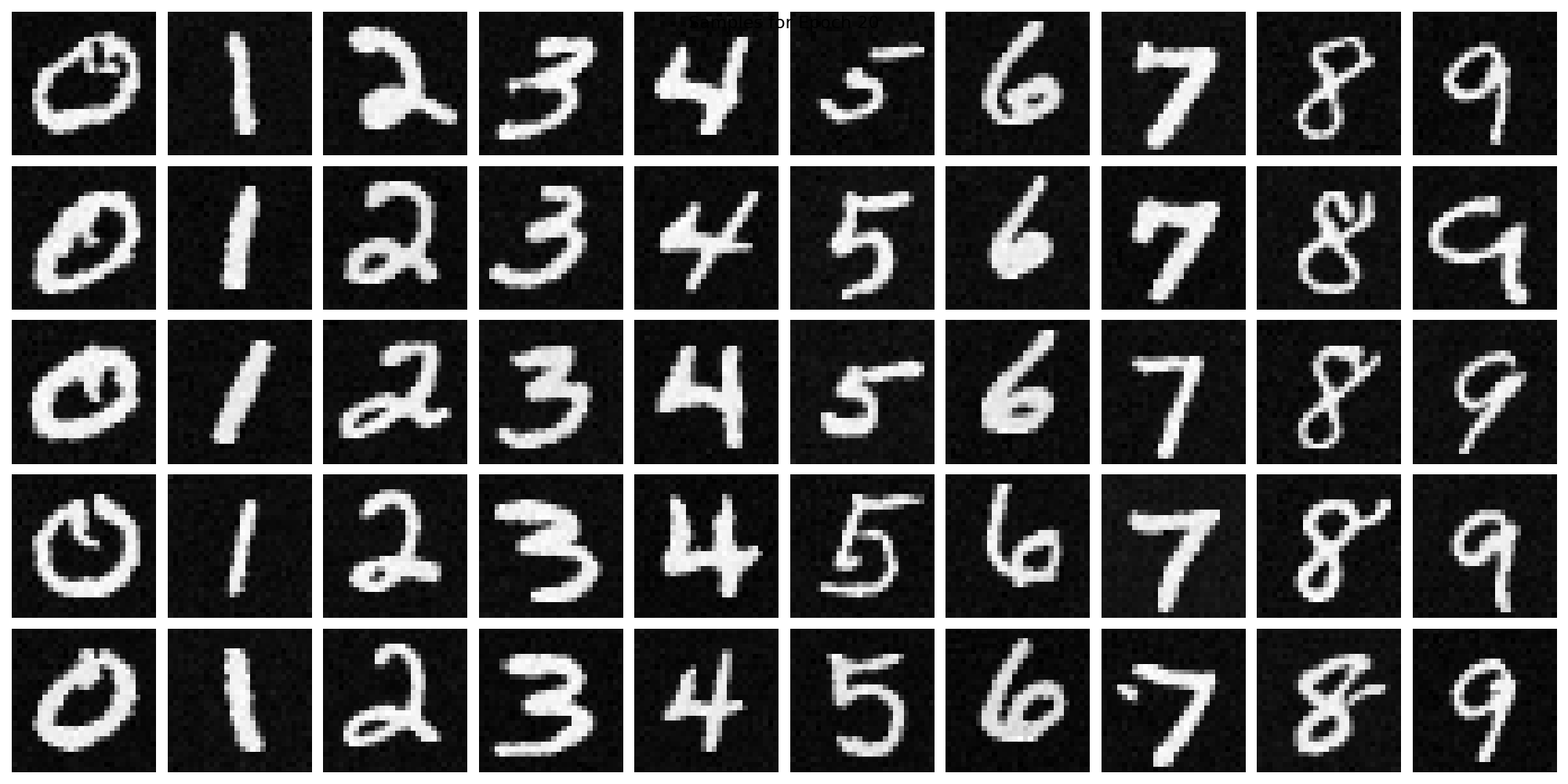

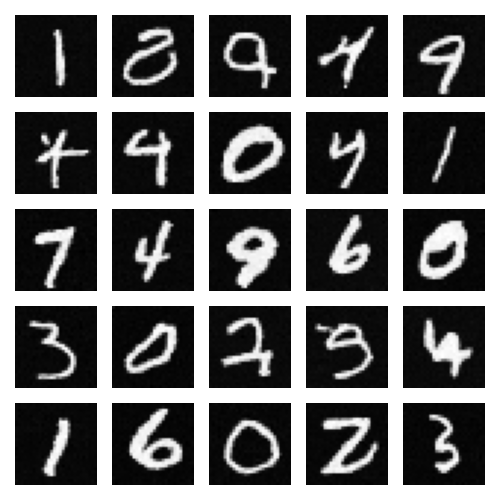

Training a Class Conditional Diffusion Model

Now we will provide the model with the class label as a one-hot encoded vector, and perform the same task as before.
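The one-hot encoding can be sketched in a couple of lines. The second helper, which zeroes out the class vector for a random fraction of training samples so the model also learns an unconditional mode, is a common companion to classifier-free guidance; it is an assumption here and not something stated above, as is the drop probability.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Turn integer digit labels into one-hot row vectors."""
    return np.eye(num_classes, dtype=np.float32)[np.asarray(labels)]

def drop_conditioning(c, p_uncond, rng):
    """ASSUMED extra step: zero each class vector with probability p_uncond."""
    keep = (rng.random(c.shape[0]) >= p_uncond).astype(c.dtype)
    return c * keep[:, None]

rng = np.random.default_rng(0)
labels = [3, 0, 9, 9]
c = one_hot(labels)
c_train = drop_conditioning(c, p_uncond=0.1, rng=rng)  # hypothetical drop rate
```

An all-zero class vector then plays the role of the empty prompt when sampling with guidance.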

Training a Class Conditional Diffusion Model