Neural Radiance Fields

Final Project in CS180: Intro to Computer Vision and Computational Photography

This was my final project for CS180: Intro to Computer Vision and Computational Photography. The goal was to implement Neural Radiance Fields (NeRF), a method for synthesizing novel views of complex scenes. NeRF fits a neural network to a set of input images with known camera poses, so that the network represents the scene as a volumetric radiance field. That field is then rendered from new viewpoints to produce novel views.

2D Case

We begin with the humble 2D case for Neural Radiance Fields. Here there is no volume to render: the "radiance field" is just the image itself, a 2D grid of colors, and the task is to learn a mapping from a 2D pixel coordinate to the color at that point. The mapping is learned by a neural network that takes the 2D image coordinate as input and outputs an RGB value. The network is trained on randomly sampled pixels from the image, and once trained it can reproduce the image from the coordinates alone. In essence, the model is deliberately overfit to the training data to produce our image.
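The input side of this setup can be sketched in a few lines of NumPy. This is a minimal sketch, not the project's actual code: it assumes a sinusoidal positional encoding (the write-up mentions positional encodings later on), and the names `positional_encoding`, `sample_pixels`, and the choice of 10 frequencies are hypothetical.

```python
import numpy as np

def positional_encoding(x, num_freqs):
    """Append sin/cos features at frequencies 2^k * pi (k < num_freqs) to x.

    x has shape (N, D) with values in [0, 1]; the result has shape
    (N, D + 2 * D * num_freqs).
    """
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi            # (L,)
    angles = x[..., None] * freqs                            # (N, D, L)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return np.concatenate([x, enc.reshape(x.shape[0], -1)], axis=-1)

def sample_pixels(image, batch_size, rng):
    """Pick random pixels; return normalized (x, y) coordinates and RGB targets."""
    h, w, _ = image.shape
    ys = rng.integers(0, h, size=batch_size)
    xs = rng.integers(0, w, size=batch_size)
    coords = np.stack([xs / w, ys / h], axis=-1)             # values in [0, 1)
    return coords, image[ys, xs]

rng = np.random.default_rng(0)
image = rng.random((32, 48, 3))   # stand-in for the real image, values in [0, 1]
coords, colors = sample_pixels(image, 1000, rng)
features = positional_encoding(coords, num_freqs=10)         # network input
```

Each training step would feed `features` to the network and compare its RGB output against `colors`.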

Let us take a look at our two images

2D Training

- With LF:20, the model learns the rough shape of the fox a little more slowly, but it gains finer detail in the whiskers for a very small cost in model size, and it performs better than the standard configuration at the same iteration count.

- With FC:6, the model learns the rough shape of the fox at around the same rate as LF:20, but fine color details are not yet present. This may be because the larger model needs more iterations to learn the finer details: the color detail is excellent once the model has trained for 3000 iterations.

- With HD:512, the model learns the rough shape of the fox at around the same rate as LF:20, and the output quality is similar to FC:6.

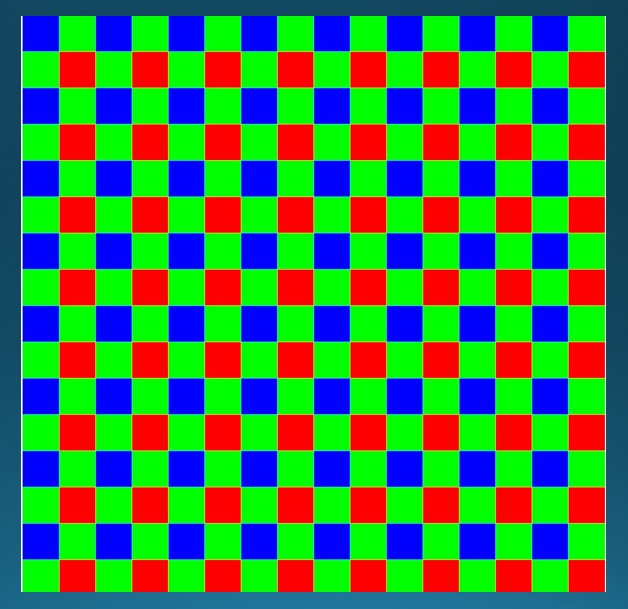

Next, I tried training on an image with very visible patterns to get a better understanding of how the different model parameters behave.

Bayer 2D Training

3D NeRF

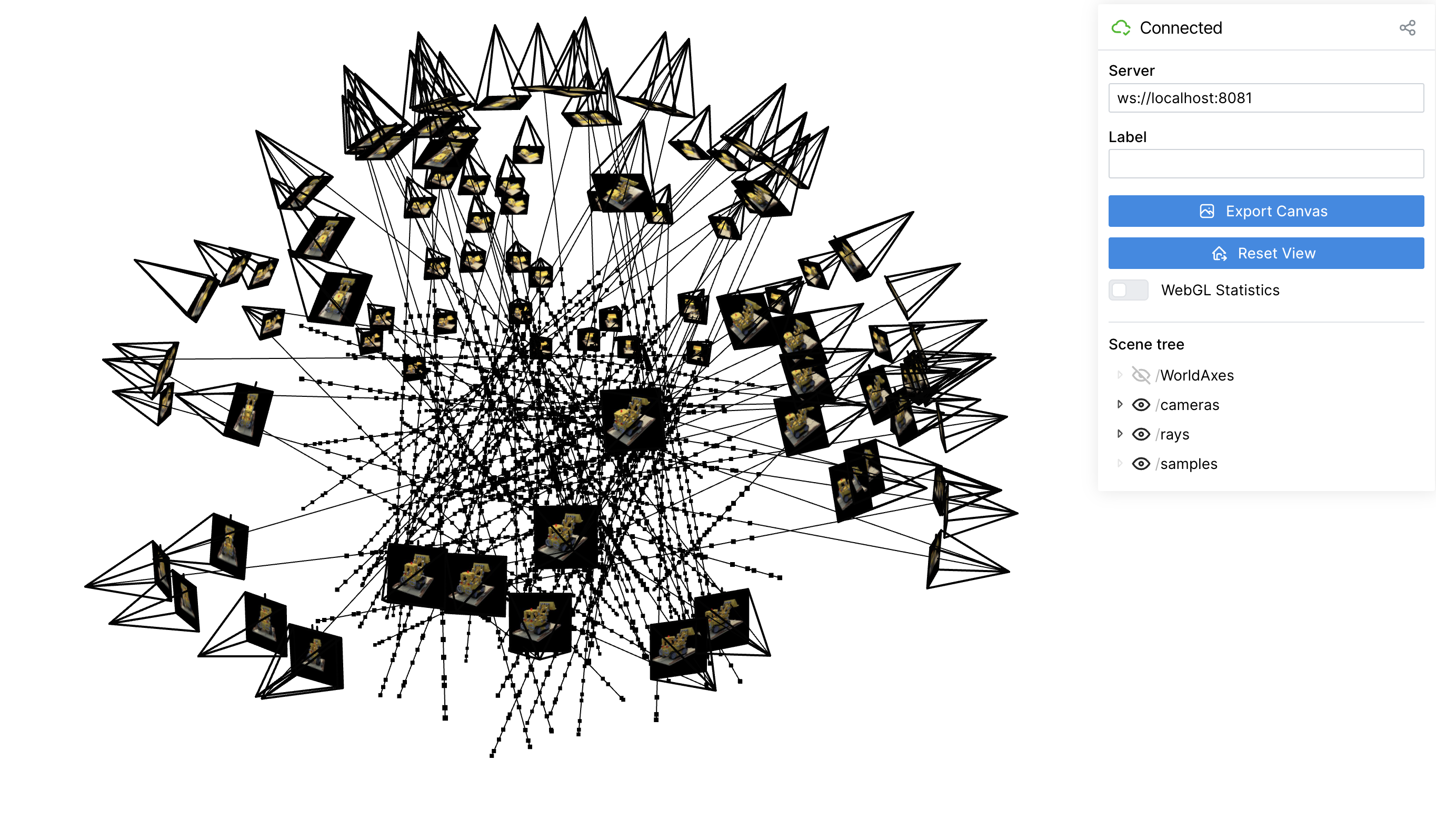

Part 2.1 To begin, I had to write functions that convert a pixel in an image to a ray. To do so, we need to know the camera intrinsics, the camera extrinsics, and the pixel location. The camera intrinsics are described by the focal length, the principal point, and the image resolution. The camera extrinsics are the camera's rotation and position in the world (its pose). The ray origin is simply the camera position. To get the direction, we place the pixel on the image plane at some depth, convert that point to world space, and normalize the vector from the origin to the point. This required me to also implement a function that lifts a pixel to a 3D point at a given depth, and a function that converts from camera coordinates to world coordinates.
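The pixel-to-ray steps described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the project's code: the function names are hypothetical, the principal point is assumed to be the image center, the camera is assumed to look along +z, and `c2w` is assumed to be a 4x4 camera-to-world matrix.

```python
import numpy as np

def intrinsic_matrix(focal, width, height):
    """Pinhole intrinsics, assuming the principal point is the image center."""
    return np.array([[focal, 0.0, width / 2.0],
                     [0.0, focal, height / 2.0],
                     [0.0, 0.0, 1.0]])

def pixel_to_camera(K, uv, depth):
    """Back-project pixel coordinates (N, 2) to camera-space points (N, 3)."""
    uv_h = np.concatenate([uv, np.ones((uv.shape[0], 1))], axis=-1)
    return depth * (uv_h @ np.linalg.inv(K).T)

def camera_to_world(c2w, x_c):
    """Apply a 4x4 camera-to-world transform to points of shape (N, 3)."""
    x_h = np.concatenate([x_c, np.ones((x_c.shape[0], 1))], axis=-1)
    return (x_h @ c2w.T)[:, :3]

def pixel_to_ray(K, c2w, uv):
    """Return ray origins (N, 3) and unit ray directions (N, 3)."""
    origin = c2w[:3, 3]                       # camera position in the world
    points = camera_to_world(c2w, pixel_to_camera(K, uv, 1.0))
    dirs = points - origin
    dirs = dirs / np.linalg.norm(dirs, axis=-1, keepdims=True)
    origins = np.broadcast_to(origin, dirs.shape).copy()
    return origins, dirs

K = intrinsic_matrix(focal=100.0, width=200, height=200)
c2w = np.eye(4)
c2w[:3, 3] = [1.0, 2.0, 3.0]                  # camera placed at (1, 2, 3)
uv = np.array([[100.0, 100.0], [200.0, 100.0]])
origins, dirs = pixel_to_ray(K, c2w, uv)
```

The depth passed to `pixel_to_camera` does not affect the direction, since the result is normalized; any positive value works.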

Part 2.2 Now we can begin sampling. We pick random training cameras and random pixel coordinates on those cameras, compute the rays for those pixels, and then sample points along each ray to get 3D points that the ray passes through.
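Sampling points along a batch of rays might look like the sketch below. The `near` and `far` bounds of 2.0 and 6.0 and the per-bin random jitter are assumptions chosen for illustration (the write-up only states that 64 samples are taken per ray), and `sample_along_rays` is a hypothetical name.

```python
import numpy as np

def sample_along_rays(origins, dirs, near, far, n_samples, rng=None):
    """Sample 3D points along each ray between the near and far bounds.

    origins, dirs: (R, 3). Returns points (R, n_samples, 3) and depths (R, n_samples).
    With rng given, each depth is jittered inside its own bin so that training
    sees different locations along the ray every iteration.
    """
    n_rays = origins.shape[0]
    # Left edge of each of the n_samples equal-width bins in [near, far).
    t = np.linspace(near, far, n_samples, endpoint=False)
    t = np.broadcast_to(t, (n_rays, n_samples)).copy()
    if rng is not None:
        bin_width = (far - near) / n_samples
        t = t + rng.random(t.shape) * bin_width
    points = origins[:, None, :] + dirs[:, None, :] * t[..., None]
    return points, t

rng = np.random.default_rng(0)
origins = np.zeros((4, 3))
dirs = np.tile(np.array([0.0, 0.0, 1.0]), (4, 1))
points, t = sample_along_rays(origins, dirs, near=2.0, far=6.0, n_samples=64, rng=rng)
```

Passing `rng=None` gives evenly spaced, deterministic samples, which is the usual choice when rendering rather than training.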

Part 2.3 Visualized, this looks like:

Part 2.4 Now we can construct our MLP in much the same way as in the 2D case, but we need to concatenate the positional encoding of the input back into the network at a deeper layer (a skip connection) to get better performance.
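To make the "concatenate deeper into the network" idea concrete, here is a forward-pass sketch in plain NumPy with random, untrained weights. Everything specific in it is an assumption for illustration: the names `init_mlp` and `forward`, the width of 256, the depth of 8, the skip at layer 4, and the sigmoid/ReLU output activations. It also leaves out the view-direction input, and a real implementation would use an autodiff framework so the weights can be trained.

```python
import numpy as np

def init_mlp(in_dim, hidden, n_layers, skip_at, out_dim, rng):
    """Random weights for an MLP whose layer `skip_at` also sees the raw input."""
    params = []
    dim = in_dim
    for i in range(n_layers):
        if i == skip_at:
            dim += in_dim                      # room for the re-injected encoding
        w = rng.normal(0.0, np.sqrt(2.0 / dim), size=(dim, hidden))
        params.append((w, np.zeros(hidden)))
        dim = hidden
    w = rng.normal(0.0, np.sqrt(2.0 / dim), size=(dim, out_dim))
    params.append((w, np.zeros(out_dim)))
    return params

def forward(params, encoded, skip_at):
    """Map encoded positions (N, in_dim) to RGB (N, 3) and density (N,)."""
    h = encoded
    for i, (w, b) in enumerate(params[:-1]):
        if i == skip_at:
            h = np.concatenate([h, encoded], axis=-1)   # the skip connection
        h = np.maximum(h @ w + b, 0.0)                  # ReLU
    w, b = params[-1]
    out = h @ w + b
    rgb = 1.0 / (1.0 + np.exp(-out[..., :3]))           # sigmoid keeps RGB in (0, 1)
    density = np.maximum(out[..., 3], 0.0)              # ReLU keeps density >= 0
    return rgb, density

rng = np.random.default_rng(0)
in_dim = 3 + 3 * 2 * 10            # xyz plus sin/cos at 10 frequencies (assumed)
params = init_mlp(in_dim, hidden=256, n_layers=8, skip_at=4, out_dim=4, rng=rng)
encoded = rng.normal(size=(5, in_dim))
rgb, density = forward(params, encoded, skip_at=4)
```

The skip connection gives the later layers direct access to the high-frequency input features, which would otherwise have to survive several nonlinear layers.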

Part 2.5 Now we can implement the core volume rendering equation, which steps along the ray and adds each sample's color to the final output color, weighted by that sample's opacity and by how much light survives the samples in front of it.
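A discrete version of this accumulation can be sketched as below, using the standard NeRF quadrature: each sample gets an opacity of 1 - exp(-density * step), and its contribution is scaled by the transmittance, the fraction of light not yet absorbed by earlier samples. The function name `volume_render` and the constant step size are assumptions made for this sketch.

```python
import numpy as np

def volume_render(densities, colors, step_size):
    """Composite per-sample colors into one color per ray.

    densities: (R, S), colors: (R, S, 3). Returns rgb (R, 3) and weights (R, S).
    """
    optical_depth = densities * step_size
    alpha = 1.0 - np.exp(-optical_depth)              # opacity of each sample
    # Transmittance before sample i: exp(-sum of optical depth over samples j < i).
    accumulated = np.cumsum(optical_depth, axis=-1)
    before = np.concatenate(
        [np.zeros_like(accumulated[:, :1]), accumulated[:, :-1]], axis=-1)
    transmittance = np.exp(-before)
    weights = transmittance * alpha                   # contribution of each sample
    rgb = (weights[..., None] * colors).sum(axis=1)
    return rgb, weights

# One ray with four samples: empty space, then a dense red surface, then green.
densities = np.array([[0.0, 0.0, 1e3, 5.0]])
colors = np.array([[[0.0, 0.0, 1.0],
                    [0.0, 0.0, 1.0],
                    [1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0]]])
rgb, weights = volume_render(densities, colors, step_size=0.1)
```

In this toy ray the red sample is nearly opaque, so it receives almost all of the weight and the green sample behind it is hidden.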

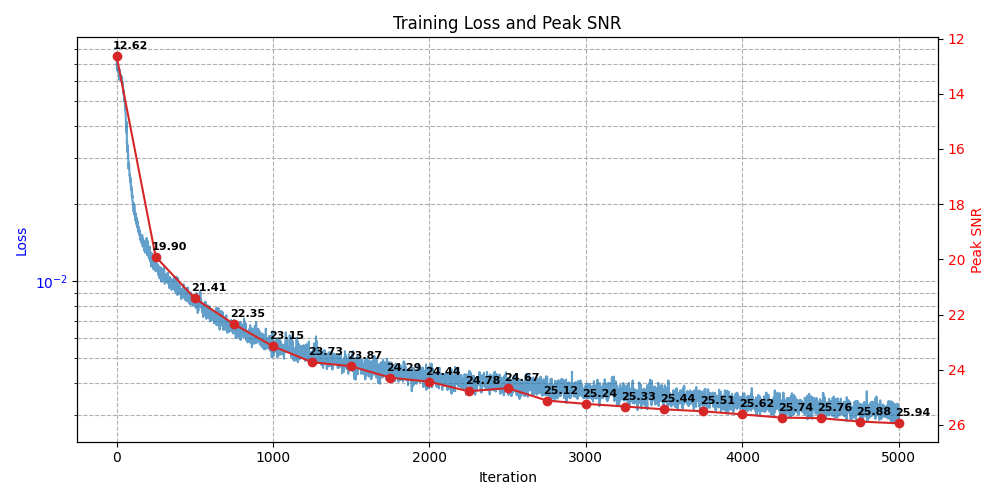

With that we can construct our NeRF model and train it on our Lego dataset! Each iteration we sample 10,000 pixels, one ray per pixel, with 64 samples along each ray. After predicting the color at every sample point, we composite the samples into a single color per ray and compare it against the true pixel color to compute the loss. We then backpropagate and update our model.

Using this, we can achieve some impressive results!

Lego NeRF Training

Here is the rendering of a novel view not in the training dataset!

Bells and Whistles

And when we change our rendering to use the accumulated opacity to detect rays that do not hit any object, we can get the following:
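One common way to do this, sketched here as an assumption rather than as the exact change made in this project, is to sum the volume rendering weights along each ray to get its total opacity and fill whatever is left over with a background color. The name `composite_background` and the array shapes are hypothetical.

```python
import numpy as np

def composite_background(rgb, weights, background):
    """Blend a background color into rays that are not fully opaque.

    rgb: (R, 3) rendered colors, weights: (R, S) per-sample rendering weights,
    background: length-3 RGB color.
    """
    opacity = weights.sum(axis=-1, keepdims=True)     # (R, 1), 0 = ray hit nothing
    return rgb + (1.0 - opacity) * np.asarray(background, dtype=float)

# Ray 0 hits nothing; ray 1 is fully absorbed by a red object.
rgb = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
weights = np.array([[0.0, 0.0, 0.0], [0.25, 0.5, 0.25]])
out = composite_background(rgb, weights, background=[1.0, 1.0, 1.0])
```

Rays with zero accumulated opacity take the background color entirely, while fully opaque rays are left unchanged.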